Our Cybernetic Future 1972: Man and Machine

I’m dividing my promised sequel to Future Snark into three parts, one each for three very smart views of a future that became our present. These are the anti-snark to that installment’s snark: Vannevar Bush (1945), Norbert Wiener (1954), and John G. Kemeny (1972). I was going to title it “Snark and Anti-Snark” to extend the Toffler joke further than it ought to go. But these installments are not snark about failed predictions. These are futurists whose predictions were accompanied by important insights into the nature of man and computer, what computerization and computerized communications mean for our culture, and what responsibilities we have as consumers and citizens within a computerized and networked society.

These authors understood the relationship between man and computer before the personal computer existed. Their predictions were sometimes strange, but their vision of how that relationship should be handled embodied important truths we must not forget. Their views of our cybernetic future focus heavily not just on the interaction between user and machine but on the relationship between computerization and humanity in general.

Surviving the ongoing computer and communications revolution requires understanding that relationship.

- Man and Machine <-

- As We May Blog

- Anti-Entropy

- Entropy in Action

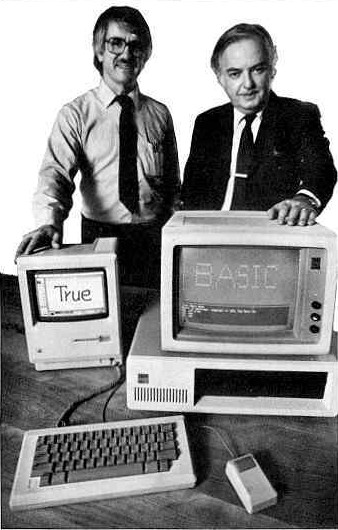

I’m going to handle these authors in reverse order, starting with John G. Kemeny. Kemeny published Man and the Computer in 1972. If Kemeny’s name sounds familiar, you might recognize “Kemeny and Kurtz” as the developers of the BASIC programming language. Much of this book, while it wasn’t designed as such, is an explanation of why BASIC is what it is—a unique programming language unmatched even today as an interactive dialogue between the user and the computer. Unlike most programming languages—including BASIC itself on modern computers—Kemeny’s BASIC didn’t require creating programs in order to get the computer to do stuff. The same commands that could be entered into a computer program could be typed directly to the computer with an immediate response.

In many ways, Kemeny’s vision is the most flawed, despite being the most modern. Kemeny was working with computers used by students in rooms filled with computer terminals. That is, dumb terminals[1] that connected to a server. He was almost certainly the only one of these three authors who used a computer to prepare their book. I suspect that as a network administrator, he was too caught up in the trees to see the forest rapidly growing around him.

But he got it right in one sense, and it was revolutionary: his BASIC design was an attempt to solve the problem of man-machine interaction in a collaborative way, where man and computer are partners in an ongoing dialogue.

This is how the language called BASIC was created. Profiting from years of experience with FORTRAN, we designed a new language that was particularly easy for the layman to learn and that facilitated communication between man and machine.

BASIC, unlike all modern computer languages[2], was interactive. There is no computer language like it even today—one where asking the computer to do something immediately and training the computer to do something later happens in the same manner. Kemeny made BASIC interactive because of his vision of a partnership with computers. More than any other programming language even today, BASIC was a dialogue between the computer user and the computer. Kemeny’s vision is a primitive form of cyberpunk.
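To make that dual mode concrete, here is a toy sketch in Python. It is not BASIC, and it is not how Kemeny and Kurtz implemented anything. It assumes a simplified convention found in many BASICs: a statement typed with a leading line number is stored for later, and the same statement typed without one runs at once. The `ToyBasic` class, its `enter` method, and the four-keyword dialect (PRINT, LET, LIST, RUN) are hypothetical simplifications for illustration.

```python
import re


class ToyBasic:
    """Hypothetical toy interpreter sketching BASIC's two modes (not Dartmouth BASIC)."""

    def __init__(self):
        self.program = {}    # line number -> stored statement (deferred mode)
        self.variables = {}  # shared by immediate and stored statements

    def enter(self, line):
        """Handle one line typed at the terminal and return any printed output."""
        line = line.strip()
        numbered = re.match(r"(\d+)\s+(.*)", line)
        if numbered:
            # A leading line number means "remember this for later".
            self.program[int(numbered.group(1))] = numbered.group(2)
            return []
        command = line.upper()
        if command == "LIST":
            return [f"{n} {self.program[n]}" for n in sorted(self.program)]
        if command == "RUN":
            output = []
            for n in sorted(self.program):
                output.extend(self.execute(self.program[n]))
            return output
        # No line number: do it right now.
        return self.execute(line)

    def execute(self, statement):
        """Run a single PRINT or LET statement."""
        keyword, _, rest = statement.partition(" ")
        keyword = keyword.upper()
        if keyword == "PRINT":
            return [str(self.evaluate(rest))]
        if keyword == "LET":
            name, _, expr = rest.partition("=")
            self.variables[name.strip().upper()] = self.evaluate(expr)
            return []
        raise ValueError(f"unsupported statement: {statement}")

    def evaluate(self, expr):
        """Evaluate a numeric expression using the current variables."""
        # Toy shortcut: Python's eval (builtins disabled) stands in for a real
        # BASIC expression parser. Never use this on untrusted input.
        return eval(expr.strip().upper(), {"__builtins__": {}}, dict(self.variables))


if __name__ == "__main__":
    basic = ToyBasic()
    print(basic.enter("PRINT 2 + 3"))  # immediate mode: ['5']
    basic.enter("10 LET X = 6")        # stored, no output yet
    basic.enter("20 PRINT X * 7")      # stored, no output yet
    print(basic.enter("RUN"))          # deferred mode: ['42']
```

Typing `PRINT 2 + 3` answers `5` right away. Typing `10 LET X = 6` and `20 PRINT X * 7` produces nothing until `RUN`, which prints `42`. The same statement syntax serves both purposes, and that is the quality Kemeny’s design was after.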

Kurtz and Kemeny show off a version of BASIC for the Macintosh and IBM PC.

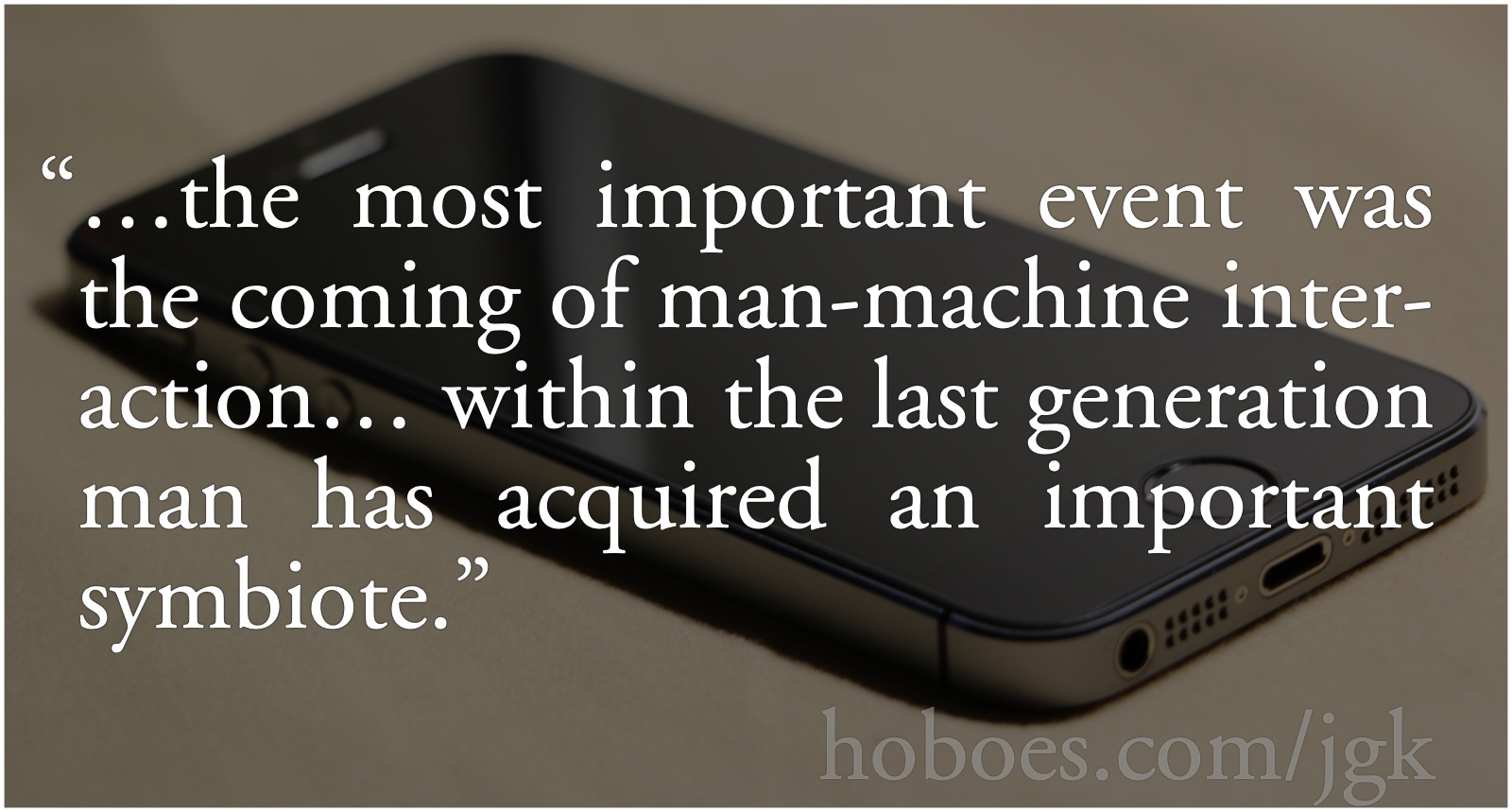

I believe that next to the original development of general-purpose high-speed computers the most important event was the coming of man-machine interaction… It is the theme of this book that within the last generation man has acquired an important symbiote.

You might think from this that Kemeny would have foreseen the development of personal assistants attached to the hip—that is, our modern smartphone. But Kemeny had a very serious bias. He managed a distributed computing center at Dartmouth. Whenever he wrote about the coming ubiquity of computers in the home, he was talking about terminals in the home, connecting to a very small number of computer centers. Computer centers controlled by people like him who could provide great things for their users. In many ways, users would be the symbiote attached to the server.

Kemeny did indeed provide great things for his users. One of his accomplishments at the Dartmouth Computer Center was the development of the Dartmouth Time Sharing System. DTSS made it possible for large numbers of users to connect to the central computer at the same time. One of the surprising features it enabled was chatting between users of that server. It enabled both real-time and turn-based games with multiple participants, “two or more human beings [connected] to the same computer program”.

He saw that this kind of communication, including in games, would be important in the future, and that “…so far we have only the vaguest impressions as to the full power of a team consisting of several human beings and a computer.”

I see absolutely no reason why a very reliable computer terminal could not be manufactured to sell for the price of a black-and-white television set. This will be necessary if computers are to be brought into the home.

He didn’t see that computers would literally be brought into the home, and not merely connected to from the home. Instead of several people and one computer, we now have several computers for every person. Writing this in 1972, he predicted that “Within the next two decades the price will undoubtedly come down to a level which will make computer terminals in the home quite common.”

That was true. By 1992 computer terminals in the home were very common—but they were software running on personal, general-purpose computers. This made a huge difference. Everything from the kinds of games that could be played to the kinds of things people would want to do online follows from the fact that everyone already had a real computer in their home with real processing power. This freed the central computer to handle nothing but communications between individuals on the network. It meant that the apps people demand of servers are communications-based.

The Internet of today would not be possible in an environment of dumb terminals filtering all communications through a regional server. The immersive video games and environments people play today wouldn’t be possible even with today’s high-speed Internet if home computers were merely terminals.[3]

Kemeny had no idea that home computers would quickly become ubiquitous:

Most people today grew up when no modern computers were in existence. While the same situation applied to automobiles in the early twentieth century, a fairly rapid change took place. Even if not everyone drove an automobile, almost everyone had a friend who owned one. Automobiles quickly became common on our streets, and their principles of operations were simple and easily understood. Unfortunately, the average person does not have the foggiest idea of just what a computer is or how it works. And, since computers are shielded from them by the high priests of the profession, all their acquaintance is from a distance.

Fortunately, a very similar rapid change was about to take place in which everyone would either own a computer or have a friend who owned a computer.

Kemeny’s response to trends was colored by his bias toward centralization. Dartmouth’s library had been doubling every 20 years or so, which meant that by the year 2000 it would have two million holdings. Rather than predicting “this is going to be awesome,” he denied that such a massive influx of books was possible. It “represents an expenditure that the College cannot possibly afford.”

In fact, according to their website, Dartmouth’s library added “its 2 millionth acquisition, The Sine Collection of British Illustrated Books” in 1994, six years ahead of schedule, and doesn’t appear to be having any trouble with that many holdings.

Assuming that we cannot weather future growth because we cannot handle that growth today is a common failing of humanity’s very fallible expert class. It’s at the heart of the Malthusian error, which continually predicts starvation a handful of decades into the future, a prediction its proponents have been making for literally centuries. Like end-of-the-world preachers, they continually push the end of the world back without ever acknowledging they were wrong.[4]

Malthus himself has been thoroughly discredited, but the mindset permeates public policy today. Whenever some politician or bureaucrat tells us we have to live with less rather than strive to create more, they’re doing so from a Malthusian mindset. If we do get starvation in the near future, it will not be because Malthus was right. It will be because we will have backslid into a Malthusian barbarism that denies progress. It will be because, thinking that our metaphorical library cannot sustain two million books, we make writing illegal.

The most interesting parts of the book, however, are not his predictions but his philosophy of man-machine interaction. Kemeny treated computers as if they were living things, with man and computer sharing a mutually beneficial symbiosis. Kemeny recognized that computers are not really living things. He also recognized that this doesn’t matter.

I would like to argue that the traditional distinction between living and inanimate matter may be important to a biologist but is unimportant and possibly dangerously misleading for philosophical considerations.

BASIC was important, and something like BASIC is important today, because a basic knowledge of computer programming is essential not just to effectively use computers but to be an effective citizen in a computerized culture.

The availability of a language as simple as BASIC has made the learning task so simple that computers have come within the power of every intelligent human being, and time sharing has made it possible to have direct communication between man and machine.

Kemeny’s vision for BASIC was almost literally a conversation between man and machine in which the machine could perform its tasks now and also remember those commands to perform the same tasks later. This kind of interactive dialogue between the computer and the user was essential to the rapid adoption of home computers.

I’m not quoting Kemeny merely as an inducement to buy 42 Astounding Scripts—although you should. An effective dialogue with our personal computers is essential to the effective use of computers today. Without that knowledge, we have no understanding of what computers can and cannot do for us. And, to paraphrase Real Genius’s Chris Knight, what they can do to us.

The conversational relationship between user and computer that Kemeny envisioned—treating the computer as a colleague and not as a dictator or even as an inanimate object that cannot be reasoned with—is essential to a free society. Without that understanding, the man-computer relationship is essentially a master-slave relationship with man in the position of slave. Computers are never afraid of people. But people are often afraid of computers, fearing that a wrong step will cause the computer to react by deleting their data or doing something unexpected. Kemeny would hate how people work with computers today—following prescribed steps that someone else has set up, always living in fear of doing something wrong if they step outside that strict series of steps.

This fear can and will be manipulated by bureaucrats to create a world where customers are little more than resources to be mined for their advertising value, and a society where citizens are subordinate to the capricious whims of a tyrannical bureaucracy. Where all communications are as divisive as Facebook, and all our interactions with government as unyielding as a stereotypical DMV.

In response to The pseudo-scientific state and other evils: In 1922, following the first world war, G.K. Chesterton discovered to his dismay that the evils of the scientifically-managed state had not been killed by its application in Prussia. Unfortunately, it was also not killed by its applications in Nazi Germany.

- February 15, 2023: Our Cybernetic Future 2023: Entropy in Action

When I was growing up, a standard response taught by parents to young children was “sticks and stones may break my bones, but names will never hurt me.”

I doubt parents still teach that. There are a lot of assumptions in that advice that are no longer safe, notably that it won’t be taken as a challenge. The assumption then was that verbal exchanges remained verbal exchanges. Nowadays the assumption is that knifing a fellow student in the back is merely standard schoolyard play.

That was seriously said by the West Side Left, back in 2021.

Teenagers have been having fights including fights involving knives for eons. We do not need police to address these situations by showing up to the scene & using a weapon against one of the teenagers.

Their logic in letting kids knife kids would be perfectly understandable to Norbert Wiener. Yes, young teenagers have engaged in deadly fights for eons. It was hard work bringing our culture out of the barbarism of child violence. But there is a growing contingent today that doesn’t just ignore the fact that without work we get barbarism; they welcome the slide backward into barbarism, even to the point of supporting kids knifing kids.

Without work, entropy always wins.

- December 14, 2022: Our Cybernetic Future 1954: Entropy and Anti-Entropy

Having dealt with someone at the far cusp of the personal computer revolution, and someone at its conception, I’d like to look at someone in between. Like Vannevar Bush, Norbert Wiener did not use personal computers. They didn’t exist. Nor was there anything remotely like the Internet. But it was the Internet’s potential for degrading human communication that frightened him. Or, more generally, the vast speed-up of the coming communications technology divorced from any acknowledgment that communication is always subject to degradation and that progress is not natural.

Norbert Wiener feared a faster and faster world with less and less content, because of a culture that more and more denies the existence of entropy. Entropy originated as a term from physics for the level of disorder and randomness in a system. The second law of thermodynamics says that the entropy of an isolated system tends to increase; in simpler terms, everything tends toward disorder. Order requires that some form of work be added to the system. You can think of entropy as a measure of how jumbled up a jigsaw puzzle is. But the universe is not just a jigsaw puzzle that must be put back together by applying work to reduce the entropy in the puzzle. The universe is a jigsaw puzzle that continually falls apart, as if it were on a glass table in an earthquake. Work is required not just to keep it from getting more disordered, but to keep it from mixing up with the table, too.

- November 30, 2022: Our Cybernetic Future 1945: As We May Blog

In 1945, Vannevar Bush told us our future: fast computers attached to powerful networks, enabling nearly unimaginable individual creativity and research; and even more importantly, communications both with other people and with the vast wealth of human knowledge. As We May Think is possibly the most influential essay in the history of both science fiction and computers. I’m almost surprised that we didn’t name computers “memexes” given how influential As We May Think was in science fact and fiction.

There are two aspects of this famous essay: what was happening and what was going to happen, in both the growth of knowledge and the advancement of computer science. Vannevar Bush didn’t use terminology we’re familiar with. It didn’t exist. The title of the essay was meant literally: he predicted that our thinking would change in the future. He predicted a hybrid, cyborg future, offloading repetitive thought to automated processes, and naturally integrating automated knowledge retrieval into the way we think on a daily basis.

As someone who can no longer remember anything without keeping my phone on hand, I resemble that vision.

“There is a growing mountain of research…” Bush wrote, and “we are being bogged down today as specialization extends…”. Important research had always run the risk of obscurity, and it was only getting worse.

Mendel’s concept of the laws of genetics was lost to the world for a generation because his publication did not reach the few who were capable of grasping and extending it; and this sort of catastrophe is undoubtedly being repeated all about us, as truly significant attainments become lost in the mass of the inconsequential.

[1] Nowadays, the phrase “dumb terminal” is very vague. In Kemeny’s era, dumb terminals were devices meant solely for connecting to a central computer, that is, to a server. In fact, “dumb terminals” are computers. But they’re computers only in the sense that the timer on your stovetop or the receiver in your portable stereo is a computer. They are not general-purpose and can only be interacted with in very limited, predetermined ways. You can set your timer; you can dial in a new station or press “play” to start a CD. But you can’t edit video on your stovetop or balance your checkbook on your stereo.

More importantly, you can’t adjust your stovetop to your cooking preferences, and you can’t adjust your stereo to automatically switch stations on an annoying commercial break.

[2] Modern BASIC is rarely interactive on modern computers; it’s been disconnected from the computer’s internal functions and must be interacted with through a programming environment that denies BASIC true interactivity. This is probably how it should be. BASIC is not the appropriate tool for a fully interactive dialogue with modern computers. But something like it is essential if we want a world where computers are colleagues rather than masters.

[3] Today, high-definition video is routinely transferred from servers to computers over the Internet. But that process uses “buffering” to transfer video faster than you can view it, to overcome momentary network slowdowns. You can’t buffer game video—the game server can’t know what video to send until the player makes their choices, and then it’s too late to buffer. It needs to respond to the player immediately.

[4] This is unfair to end-of-the-world preachers. Very often they do acknowledge they were wrong, and that they miscalculated when the end of the world would come, even if they don’t acknowledge they were wrong that the end of the world would come. Malthusians usually don’t even acknowledge their calculations were wrong. They simply quietly update their predictions and pretend they never made the old predictions.

futurism

- 42 Astoundingly Useful Scripts and Automations for the Macintosh

- MacOS uses Perl, Python, AppleScript, and Automator and you can write scripts in all of these. Build a talking alarm. Roll dice. Preflight your social media comments. Play music and create ASCII art. Get your retro on and bring your Macintosh into the world of tomorrow with 42 Astoundingly Useful Scripts and Automations for the Macintosh!

- John G. Kemeny at Wikipedia

- “John George Kemeny was a Hungarian-born American mathematician, computer scientist, and educator best known for co-developing the BASIC programming language in 1964 with Thomas E. Kurtz… [He] pioneered the use of computers in college education.”

- Review: Man and the Computer: Jerry Stratton at Jerry@Goodreads

- Man is in a symbiotic relationship with the computer; that the computer is not alive doesn’t matter. The distinction between living and inanimate matter is unimportant because of the features of this symbiosis.

- War and Anti-War: Making Sense of Today’s Global Chaos: Alvin Toffler at Amazon.com (paperback)

- “Beginning with a provocative analysis of warfare in the past, futurists Alvin and Heidi Toffler offer intriguing insight into today’s military conflicts—and an eye-opening portrait of the battles of the future. By describing the horrifying realities of future war, the authors offer innovative strategies for implementing future peace.”

- Why should everyone learn to program?

- Anything that mystifies programming is wrong.

history

- Dartmouth Library: Visiting and History

- “The Dartmouth Library has a rich history that began in 1770. Come visit the José Clemente Orozco mural, the Dr. Seuss Room, and more.”

- Future Snark

- Why does the past get the future wrong? More specifically, why do expert predictions always seem to be “hand your lives over to technocrats or we’ll all die?”

- John G. Kemeny: BASIC and DTSS: Everyone a Programmer

- “The personal computer helped ‘distribute’ computing, which Kemeny thought was crucial to the progress of society. But it also diminished in importance the centralized computing power and the interconnectivity of users that time sharing made possible.”

- Learning to program without BASIC

- If BASIC is dead, how can our children—or anyone else—learn to program? Today people interested in programming have far more options available to get started hacking their computers.

- Real Genius

- A movie about college kids that didn’t dumb down college to get its laughs. One of my favorite movies.

More Future Snark

- Our Cybernetic Future 1945: As We May Blog

- As we go back in time for insight into the future, actual hardware recedes and the relationship between man and hardware comes to the fore. In 1945, Vannevar Bush laid out a vision of the Internet and desktop computers filled with the knowledge of mankind. And he recognized that this would not merely change how quickly we think, but how we think.

- Our Cybernetic Future 1954: Entropy and Anti-Entropy

- In 1954, Norbert Wiener warned us about Twitter and other forms of social media, about the breakdown of the scientific method, and about the government funding capture of scientific progress.

- Our Cybernetic Future 2023: Entropy in Action

- Studying the past, we can improve the future. Studying the futurists of the past, we can learn the tools to improve the future.

More programming for all

- Internet and Programming Tutorials

- Internet and Programming Tutorials ranging from HTML, Javascript, and AppleScript, to Evaluating Information on the Net and Writing Non-Gendered Instructions.

- No premature optimization

- Don’t optimize code before it needs optimization or you’re likely to create unoptimized code.

- Smashwords Post-Christmas Sale

- Smashwords is having a sale starting Christmas day, and both Astounding Scripts and The Dream of Poor Bazin are 75% off.
